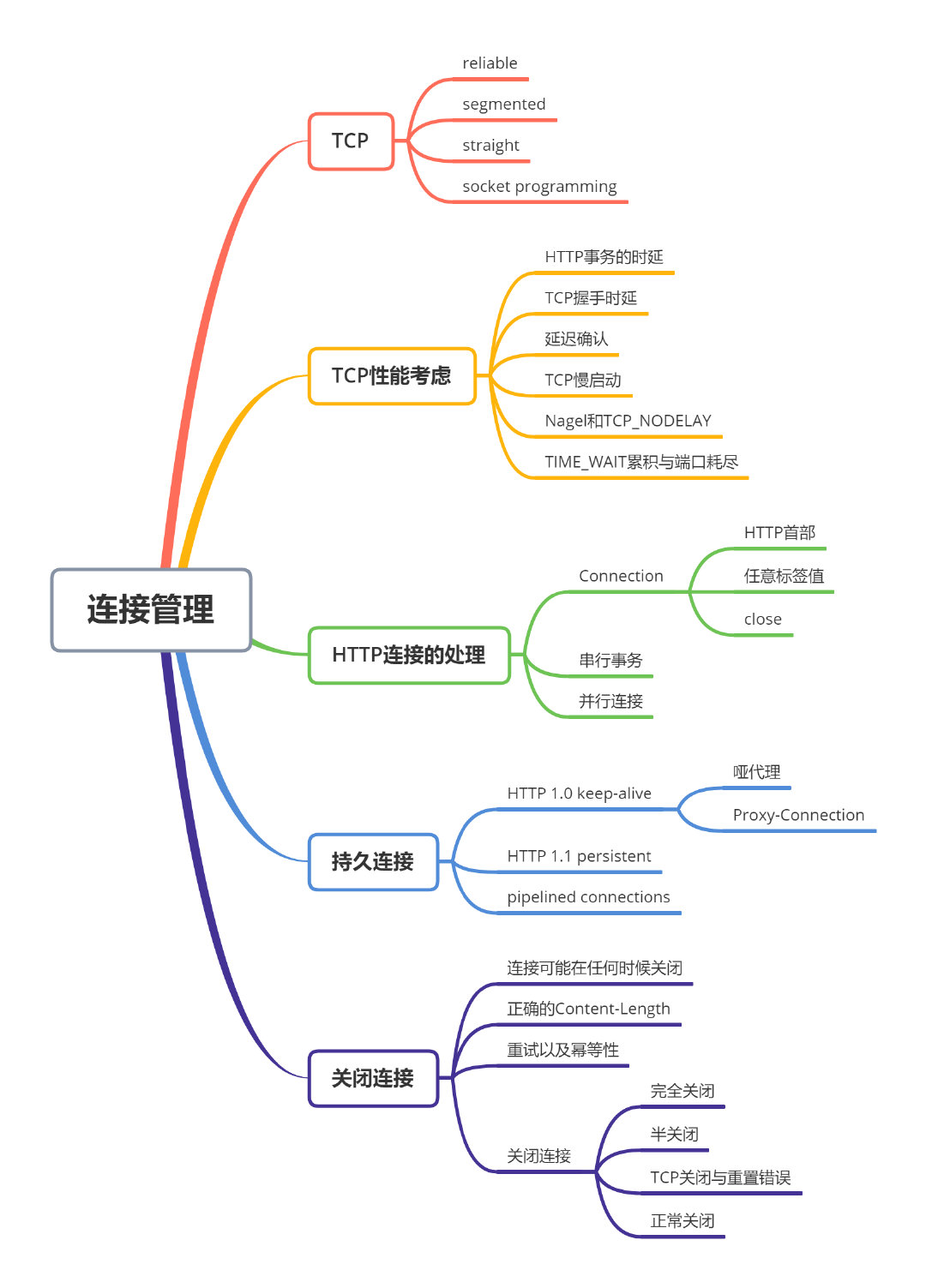

ch04: Connection Management

0. Guide

How HTTP uses TCP connections

Delays, bottlenecks and clogs in TCP connections

HTTP optimizations, including parallel, keep-alive, and pipelined connections

Dos and don'ts for managing connections

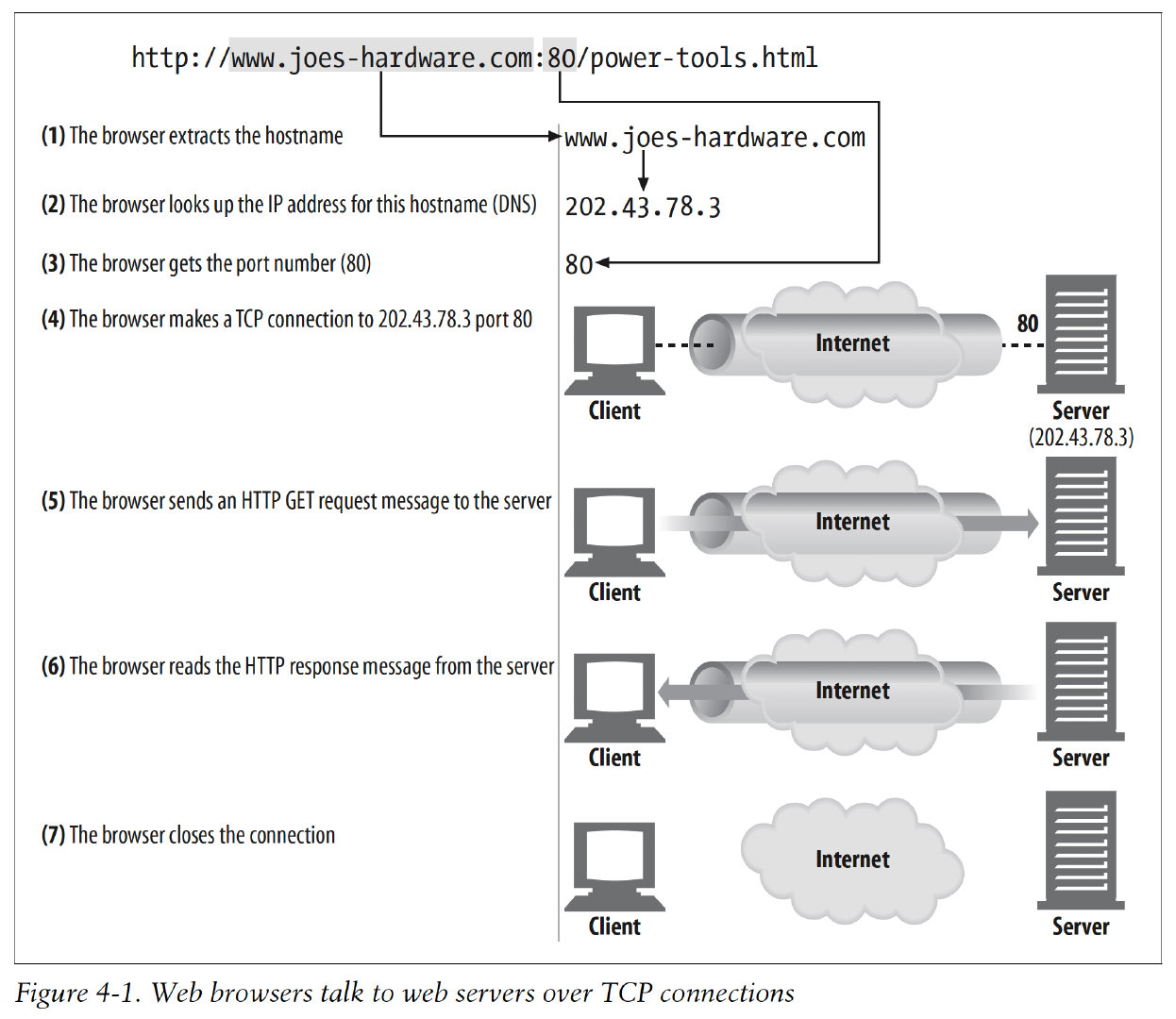

1. TCP Connections

Steps:

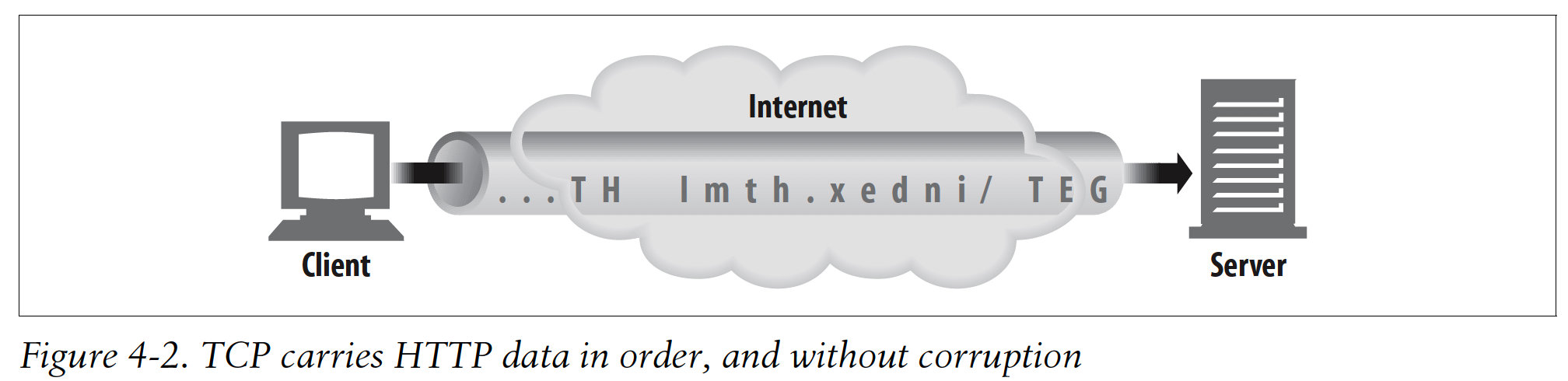
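The client-side steps in the figure above can be sketched with Python's standard `socket` and `urllib.parse` modules. This is an illustrative assumption, not the book's code: `parse_host_port`, `build_request`, and `fetch` are hypothetical helpers, port 80 is assumed when the URL omits one, and the request asks for `Connection: close` so that reading until end-of-file marks the end of the response.

```python
import socket
from urllib.parse import urlsplit

def parse_host_port(url):
    """Extract the hostname and port from an http:// URL (port assumed 80 if absent)."""
    parts = urlsplit(url)
    return parts.hostname, parts.port or 80

def build_request(url):
    """Build a minimal HTTP/1.1 GET request message as bytes."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    return (f"GET {path} HTTP/1.1\r\n"
            f"Host: {parts.netloc}\r\n"
            "Connection: close\r\n"   # ask the server to close when done
            "\r\n").encode("ascii")

def fetch(url):
    host, port = parse_host_port(url)
    # create_connection resolves the hostname and performs the TCP handshake
    with socket.create_connection((host, port), timeout=10) as sock:
        sock.sendall(build_request(url))      # send the HTTP request
        chunks = []
        while True:                           # read until the server closes
            data = sock.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks)                   # the with-block closed the socket
```

`fetch("http://www.example.com/")` returns the raw response bytes (status line, headers, and body) and needs network access; the two pure helpers can be exercised offline.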

1.1 TCP Reliable Data Pipes

1.2 TCP Streams Are Segmented and Shipped by IP Packets

2. TCP Performance Considerations

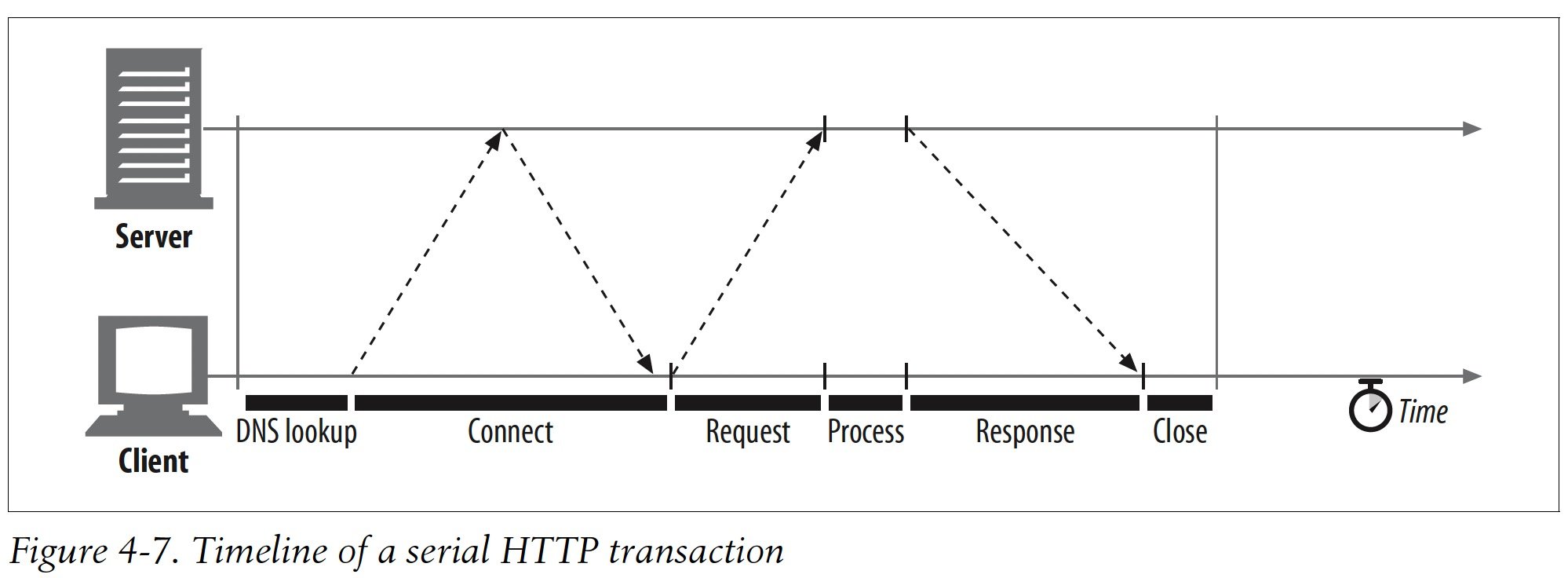

2.1 HTTP Transaction Delays

2.2 Performance Focus Areas

The TCP connection setup handshake

TCP slow-start congestion control

Nagle's algorithm for data aggregation

TCP's delayed acknowledgment algorithm for piggybacked acknowledgments

TIME_WAIT delays and port exhaustion
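Two of these focus areas have common per-socket knobs. The sketch below, a hedged illustration using Python's `socket` module, disables Nagle's algorithm with `TCP_NODELAY` on a client socket and sets `SO_REUSEADDR` on a listening socket so a restarted server can rebind its port while old connections linger in TIME_WAIT. The helper names are hypothetical, and `SO_REUSEADDR` does nothing for client-side port exhaustion.

```python
import socket

def make_http_client_socket():
    """Create a TCP socket tuned for small, latency-sensitive HTTP messages."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Disable Nagle's algorithm: small writes (e.g. request headers) are sent
    # immediately instead of being held back for aggregation with later data.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock

def make_http_server_socket(port=0):
    """Create a listening socket that can rebind while old connections are in TIME_WAIT."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # SO_REUSEADDR lets a restarted server bind its port even if earlier
    # connections on that port are still in the TIME_WAIT state.
    srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    srv.bind(("127.0.0.1", port))   # port 0 = any free port (for the demo)
    srv.listen()
    return srv
```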

3. HTTP Connection Handling

3.1 The Oft-Misunderstood Connection Header

In some cases, two adjacent HTTP applications may want to apply a set of options to their shared connection. The HTTP Connection header field has a comma-separated list of connection tokens that specify options for the connection that aren't propagated to other connections.

Three different types of tokens:

HTTP header field names, listing headers relevant for only this connection

Arbitrary token values, describing nonstandard options for this connection

The value close, indicating the persistent connection will be closed when done
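A minimal sketch of how a receiver might sort Connection tokens into the three kinds listed above. It assumes, for illustration only, that a token counts as a header name when a header with that name is present in the same message; `parse_connection_header` and `classify_connection_tokens` are hypothetical helpers.

```python
def parse_connection_header(value):
    """Split a Connection header value into trimmed, lowercase tokens."""
    return [tok.strip().lower() for tok in value.split(",") if tok.strip()]

def classify_connection_tokens(value, message_headers):
    """Sort Connection tokens into: close, hop-by-hop header names, other options."""
    present = {name.lower() for name in message_headers}
    result = {"close": False, "hop_by_hop_headers": [], "options": []}
    for tok in parse_connection_header(value):
        if tok == "close":
            result["close"] = True                    # close after this message
        elif tok in present:
            result["hop_by_hop_headers"].append(tok)  # header for this hop only
        else:
            result["options"].append(tok)             # nonstandard option
    return result
```

For example, `Connection: meter, close, bill-my-credit-card` on a message that carries a `Meter` header yields one header name (`meter`), the `close` flag, and one arbitrary option.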

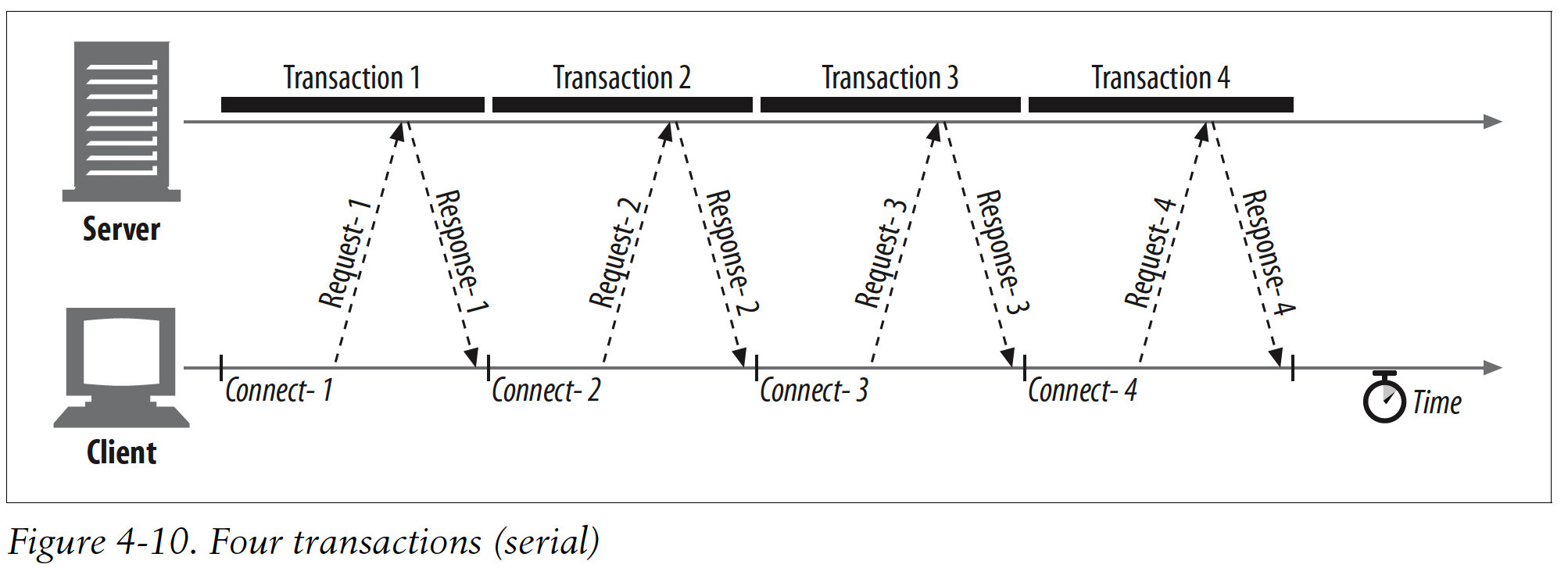

3.2 Serial Transaction Delays

Techniques to improve HTTP connection performance:

Parallel connections

Concurrent HTTP requests across multiple TCP connections

Persistent connections

Reusing TCP connections to eliminate connect/close delays

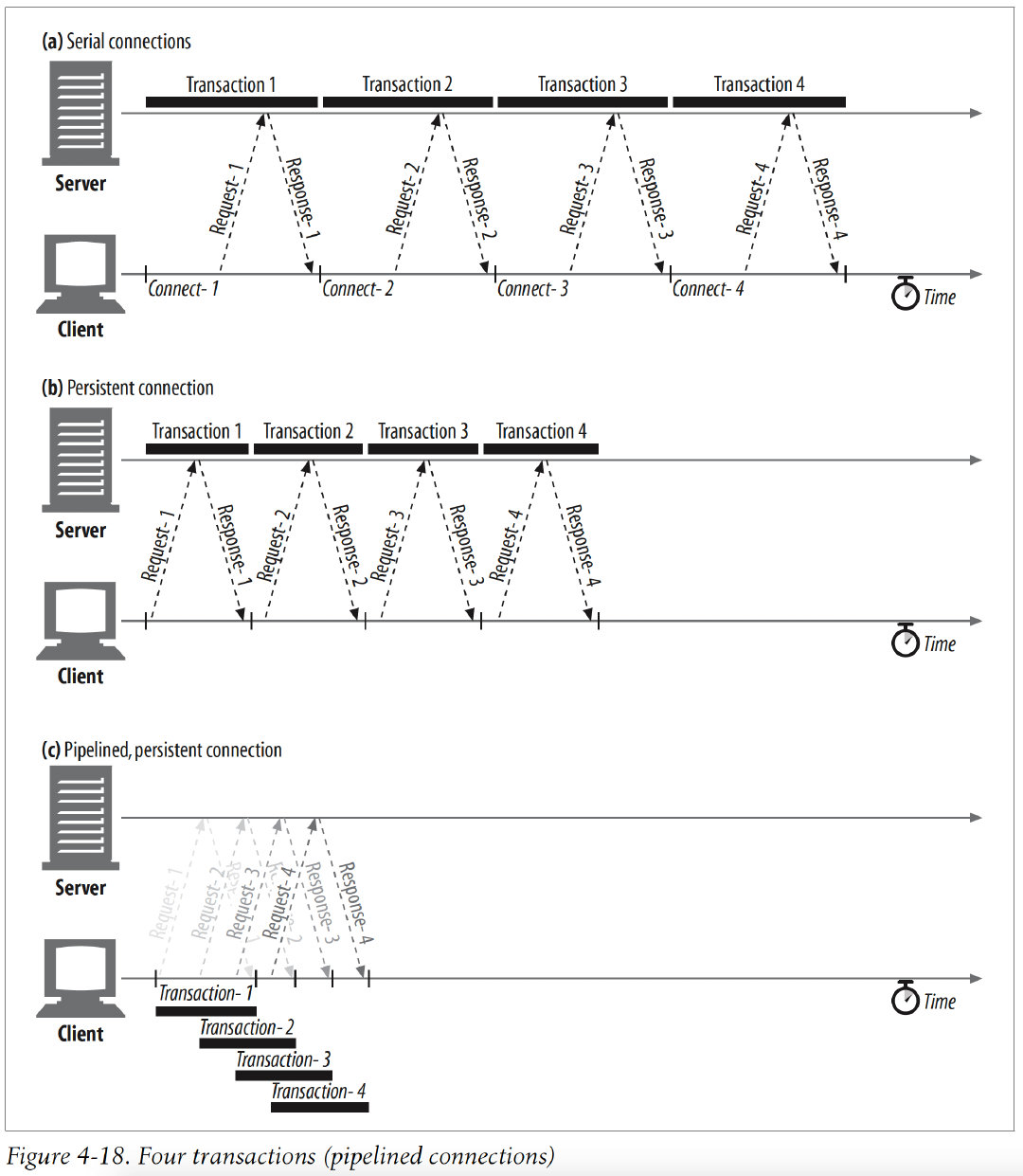

Pipelined connections

Concurrent HTTP requests across a shared TCP connection

Multiplexed connections

Interleaving chunks of requests and responses (experimental)
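A back-of-the-envelope model helps compare these techniques (multiplexing aside) against plain serial transactions. The cost terms are assumptions in arbitrary units: `connect` for the TCP handshake, `rtt` for one request/response round trip, and `transfer` for moving one response. Bandwidth contention is ignored, so this is a rough illustration, not a measurement.

```python
import math

def serial(n, connect, rtt, transfer):
    # every transaction opens its own connection, one after another
    return n * (connect + rtt + transfer)

def persistent(n, connect, rtt, transfer):
    # one connection setup, then n request/response round trips on it
    return connect + n * (rtt + transfer)

def pipelined(n, connect, rtt, transfer):
    # one setup, one round trip, then the n responses stream back to back
    return connect + rtt + n * transfer

def parallel(n, k, connect, rtt, transfer):
    # up to k connections at a time; ignores shared-bandwidth slowdown
    return math.ceil(n / k) * (connect + rtt + transfer)
```

With `n=4, connect=1, rtt=1, transfer=0.5`, `serial` gives 10.0, `persistent` 7.0, and `pipelined` 4.0. `parallel` with `k=4` gives 2.5 only because the model ignores bandwidth limits, which is exactly why parallel connections are not always faster in practice.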

4. Parallel Connections

HTTP allows clients to open multiple connections and perform multiple HTTP transactions in parallel, with each transaction getting its own TCP connection.

4.1 Parallel Connections May Make Pages Load Faster

The connection delays are overlapped, so the total elapsed time can be shorter than running the transactions one after another.

4.2 Parallel Connections Are Not Always Faster

In practice, browsers do use parallel connections, but they limit the total number of parallel connections to a small number (often four). Servers are free to close excessive connections from a particular client.
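A client-side cap on parallel connections can be sketched with a thread pool whose size is the limit. Here `MAX_PARALLEL = 4` mirrors the "often four" above and is an assumption, and `ConcurrencyProbe.fetch` is a hypothetical stand-in that sleeps instead of performing a real HTTP transaction while recording the peak number of simultaneous fetches.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_PARALLEL = 4  # assumed per-server limit

class ConcurrencyProbe:
    """Fake fetcher that records the peak number of simultaneous 'connections'."""
    def __init__(self):
        self.lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def fetch(self, url):
        with self.lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        try:
            time.sleep(0.01)              # stand-in for a real HTTP transaction
            return f"body of {url}"
        finally:
            with self.lock:
                self.active -= 1

def fetch_all(urls, fetch, max_parallel=MAX_PARALLEL):
    # At most max_parallel fetches run at once; the rest wait in the pool's queue.
    # pool.map returns results in the same order as urls.
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        return list(pool.map(fetch, urls))
```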

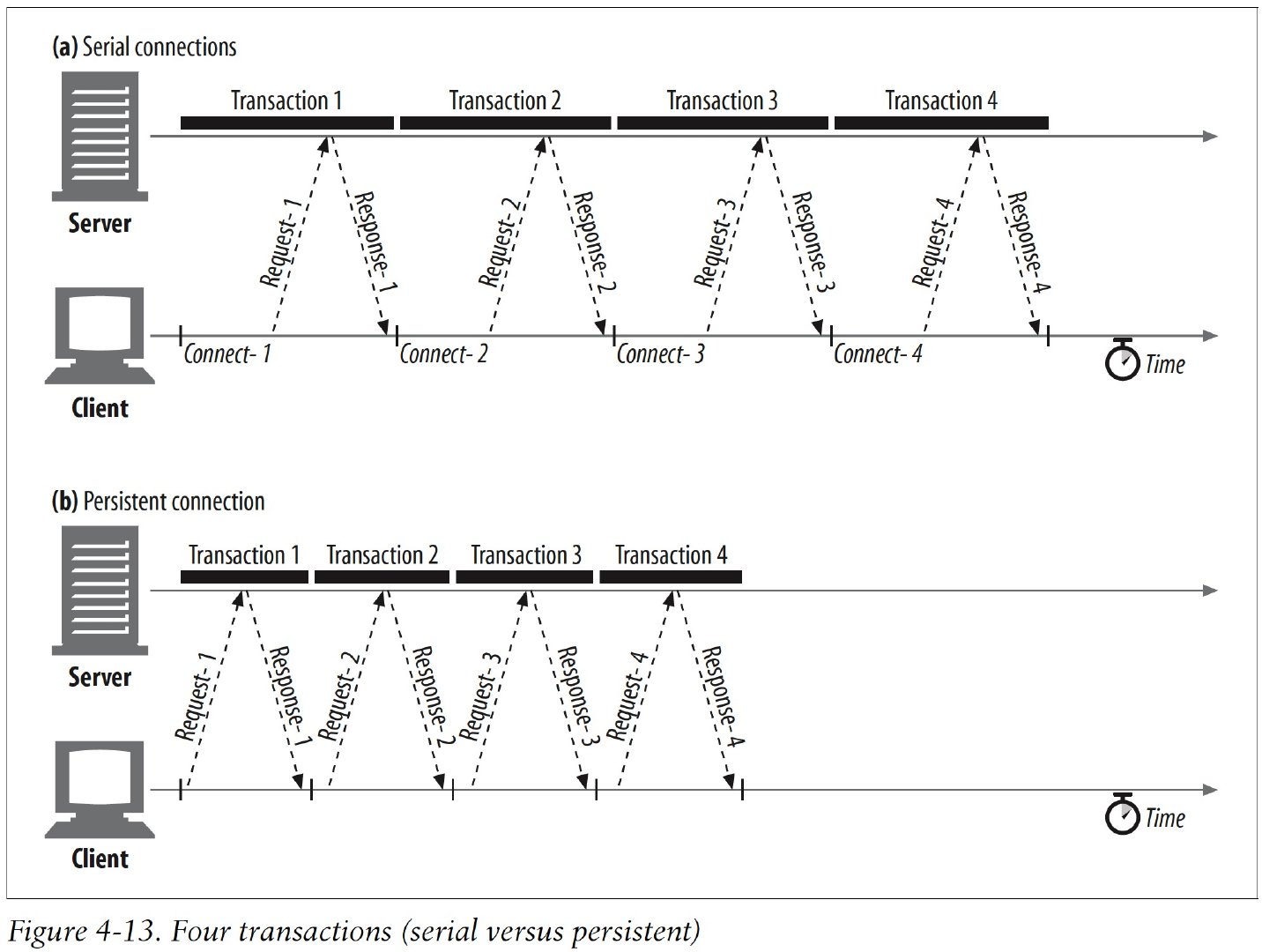

5. Persistent Connections

HTTP/1.1 allows HTTP devices to keep TCP connections open after transactions complete and to reuse the preexisting connections for future HTTP requests.

TCP connections that are kept open after transactions complete are called persistent connections. They stay open across transactions until either the client or the server decides to close them.
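The sketch below, using only Python's standard library, shows connection reuse in practice: a local `http.server` speaking HTTP/1.1 answers two requests that `http.client.HTTPConnection` sends over the same TCP socket. The handler, the paths, and the `run_demo` helper are illustrative assumptions. Each response body must be read completely before the next request is sent on the same connection.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

class Handler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"   # lets http.server keep connections open

    def do_GET(self):
        body = b"hello"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))  # self-defined length
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass                          # keep the demo quiet

def two_requests_one_connection(host, port):
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", "/first")
        conn.getresponse().read()     # drain the body before reusing the connection
        first_sock = conn.sock
        conn.request("GET", "/second")
        conn.getresponse().read()
        return conn.sock is first_sock   # True if the TCP connection was reused
    finally:
        conn.close()

def run_demo():
    server = ThreadingHTTPServer(("127.0.0.1", 0), Handler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        host, port = server.server_address
        return two_requests_one_connection(host, port)
    finally:
        server.shutdown()
        server.server_close()
```

Calling `run_demo()` reports whether the second request traveled over the first request's socket rather than a freshly opened one.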

5.1 Persistent Versus Parallel Connections

Persistent connections need to be managed with care, or you may end up accumulating a large number of idle connections.

Persistent connections can be most effective when used in conjunction with parallel connections.

Two types of persistent connections:

older HTTP/1.0+ "keep-alive" connections

modern HTTP/1.1 "persistent" connections

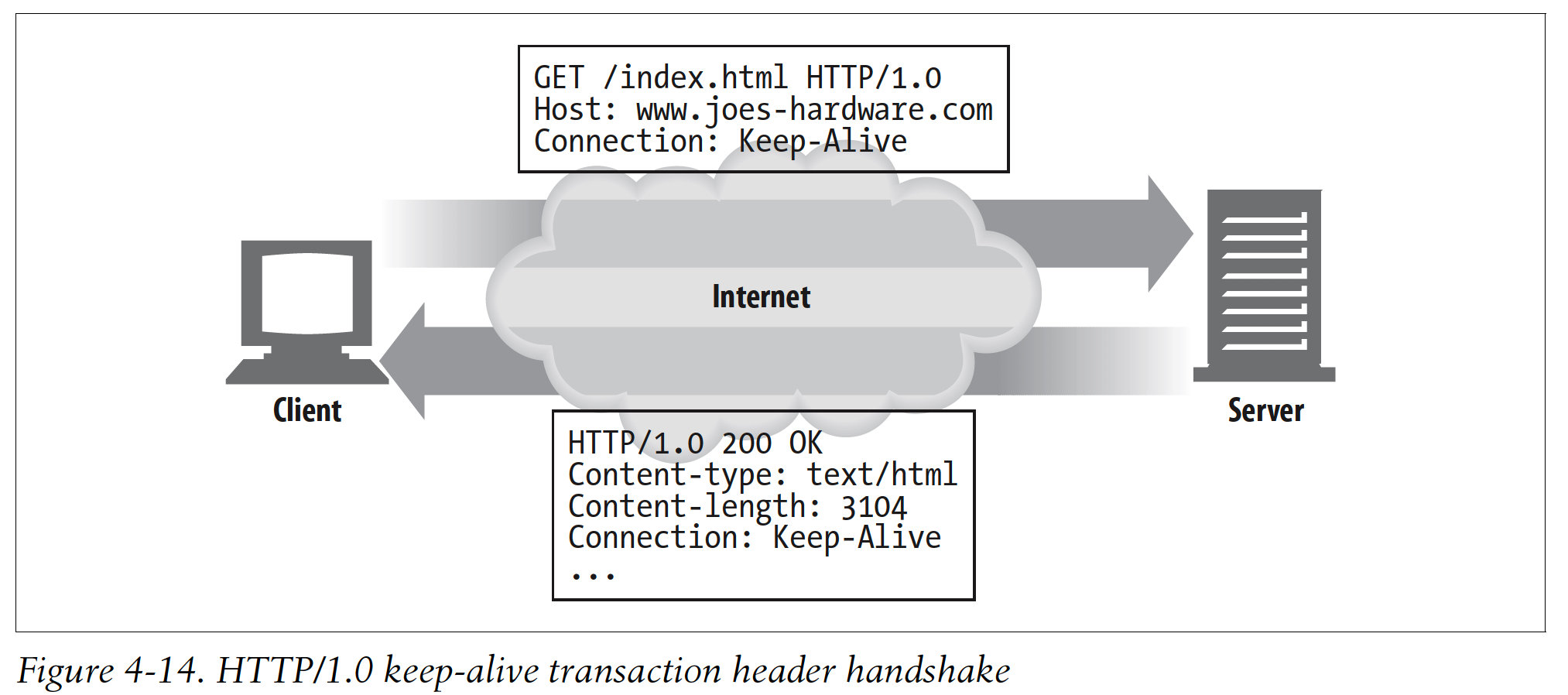

5.2 HTTP/1.0+ Keep-Alive Connections

5.3 Keep-Alive Operation

Keep-alive is deprecated and no longer documented in the current HTTP/1.1 specification.

Clients implementing HTTP/1.0 keep-alive connections can request that a connection be kept open by including the Connection: Keep-Alive request header.

If the server is willing to keep the connection open for the next request, it will respond with the same Connection: Keep-Alive header in the response.

5.4 Keep-Alive Options

The keep-alive behavior can be tuned by comma-separated options specified in the Keep-Alive general header:

The timeout parameter is sent in a Keep-Alive response header. It estimates how long the server is likely to keep the connection alive. This is not guaranteed.

The max parameter is sent in a Keep-Alive response header. It estimates how many more HTTP transactions the server is likely to keep the connection alive for. This is not guaranteed.
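A hedged sketch of parsing the Keep-Alive header's options on the client side. `parse_keep_alive` is a hypothetical helper; it assumes `timeout` is given in seconds and treats both values purely as hints, since the server does not guarantee them.

```python
def parse_keep_alive(value):
    """Parse a Keep-Alive header such as 'max=5, timeout=120' into a dict.

    The results are hints from the server, not guarantees.
    """
    params = {}
    for item in value.split(","):
        name, sep, val = item.strip().partition("=")
        name = name.strip().lower()
        if not name:
            continue                       # skip empty items
        val = val.strip()
        if name in ("timeout", "max") and val.isdigit():
            params[name] = int(val)        # seconds / remaining transactions
        elif sep:
            params[name] = val             # unknown option: keep the raw value
        else:
            params[name] = None            # bare token without a value
    return params
```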

5.5 Keep-Alive Connection Restrictions and Rules

Keep-alive doesn't happen by default in HTTP/1.0. The client must send a Connection: Keep-Alive request header to activate keep-alive connections.

If the client does not send a Connection: Keep-Alive header, the server will close the connection after that request.

Clients can tell if the server will close the connection after the response by detecting the absence of the Connection: Keep-Alive response header.

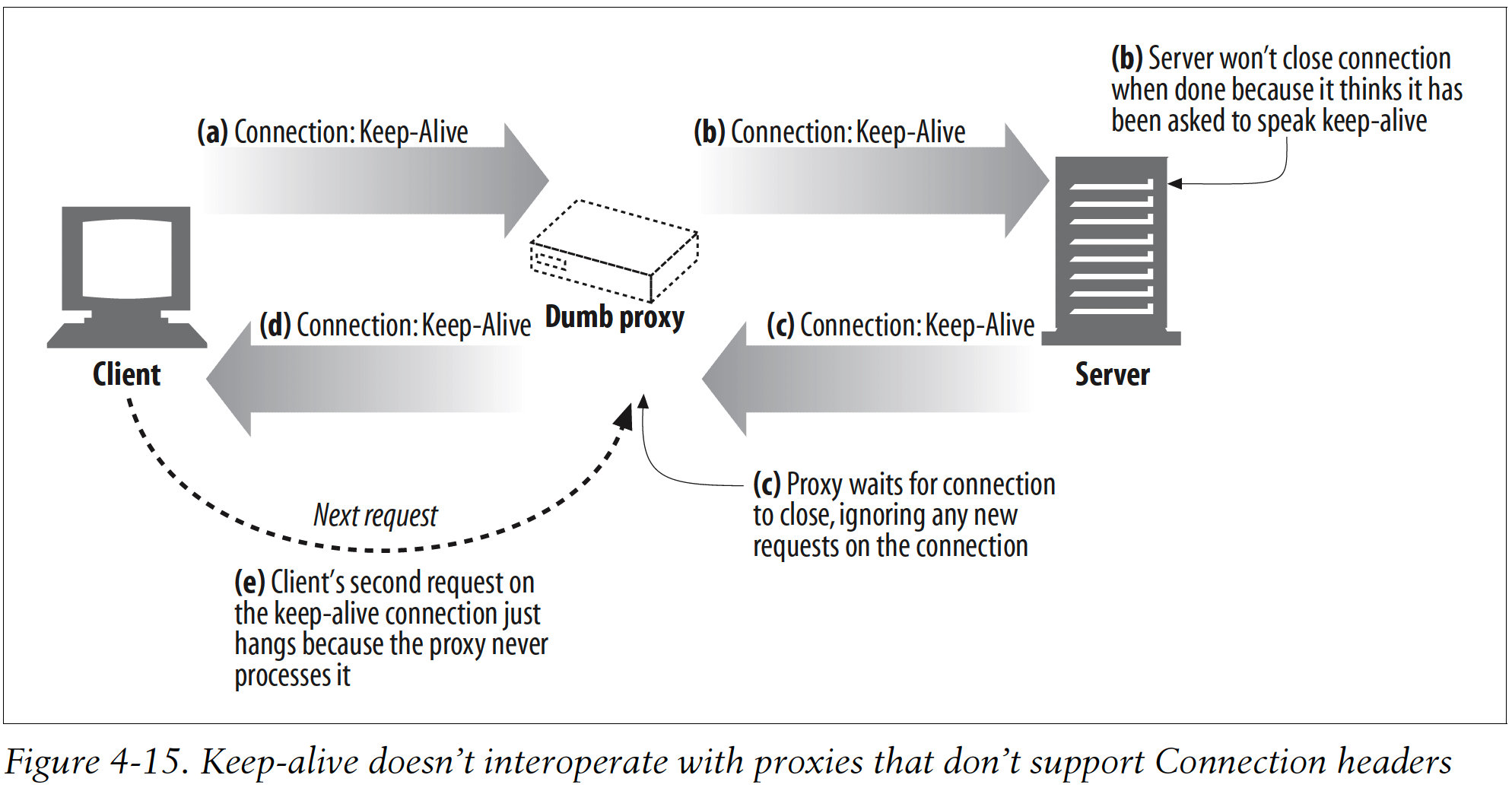

5.6 Keep-Alive and Dumb Proxies

5.6.1 The Connection header and blind relays

Many older or simple proxies act as blind relays, tunneling bytes from one connection to another, without specially processing the Connection header:

The client sends a message to the proxy, including the Connection: Keep-Alive header. The client waits for a response to learn whether its request for a keep-alive channel was granted.

The dumb proxy gets the HTTP request but doesn't understand the Connection header, so it passes the message on to the server. But the Connection header is a hop-by-hop header; it applies to only a single transport link and shouldn't be passed down the chain.

When the server receives the proxied Connection: Keep-Alive header, it mistakenly concludes that the proxy wants to speak keep-alive and sends a Connection: Keep-Alive response to the proxy. But the proxy doesn't know about keep-alive.

The dumb proxy relays the web server's response message back to the client, passing along the Connection: Keep-Alive header from the server. The client assumes the proxy has agreed to speak keep-alive. So at this point, both the client and server believe they are speaking keep-alive, but the proxy they are talking to doesn't know anything about keep-alive.

The proxy reflects all the data it receives back to the client and then waits for the origin server to close the connection. But the server will not close the connection, so the proxy hangs waiting for the connection to close.

When the client gets the response, it moves right along to the next request, sending another request to the proxy on the keep-alive connection. Because the proxy never expects another request on the same connection, the request is ignored and the browser just spins, making no progress.

This causes the browser to hang until the client or server times out the connection and closes it.

5.6.2 Proxies and hop-by-hop headers

Modern proxies must never proxy the Connection header or any headers whose names appear inside the Connection values.

If a proxy receives a Connection: Keep-Alive header, it shouldn't proxy either the Connection header or any headers named Keep-Alive.
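The forwarding rule can be sketched as a filter a proxy applies before relaying a message. This minimal version assumes headers are held in a plain dict (so repeated Connection headers are not handled) and removes only the Connection header and the headers it names; `strip_hop_by_hop` is a hypothetical name.

```python
def strip_hop_by_hop(headers):
    """Return a copy of headers that is safe for a proxy to forward.

    Drops the Connection header itself and every header named inside it.
    """
    connection = ""
    for name, value in headers.items():
        if name.lower() == "connection":
            connection = value
    named = {tok.strip().lower() for tok in connection.split(",") if tok.strip()}
    named.add("connection")
    # Header names are compared case-insensitively.
    return {name: value for name, value in headers.items()
            if name.lower() not in named}
```

A request carrying `Connection: Keep-Alive` and `Keep-Alive: max=5` is forwarded with both headers removed, which is exactly what the dumb proxy in 5.6.1 failed to do.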

5.7 The Proxy-Connection Hack

Dumb proxies get into trouble because they blindly forward hop-by-hop headers such as Connection: Keep-Alive. Hop-by-hop headers are relevant only for that single, particular connection and must not be forwarded.

This causes trouble when the forwarded headers are misinterpreted by downstream servers as requests from the proxy itself to control its connection.

5.8 HTTP/1.1 Persistent Connections

HTTP/1.1 persistent connections are active by default. HTTP/1.1 assumes all connections are persistent unless otherwise indicated.

HTTP/1.1 applications have to explicitly add a Connection: close header to a message to indicate that a connection should close after the transaction is complete.

Clients and servers still can close idle connections at any time.
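The defaults for both protocol versions can be summarized in one decision function. This is a sketch under simplifying assumptions: `connection_tokens` and `stays_open` are hypothetical, the version is an exact string, and headers are a dict. Even when `stays_open` returns `True`, either side may still close an idle connection.

```python
def connection_tokens(headers):
    """Lowercase tokens from the Connection header (empty set if absent)."""
    value = next((v for k, v in headers.items() if k.lower() == "connection"), "")
    return {tok.strip().lower() for tok in value.split(",") if tok.strip()}

def stays_open(http_version, headers):
    """Decide whether a connection may be reused after this message.

    http_version is a string such as "HTTP/1.0" or "HTTP/1.1".
    """
    tokens = connection_tokens(headers)
    if http_version == "HTTP/1.1":
        return "close" not in tokens        # persistent unless told otherwise
    return "keep-alive" in tokens           # HTTP/1.0: keep-alive is opt-in
```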

5.9 Persistent Connection Restrictions and Rules

After sending a Connection: close request header, the client can't send more requests on that connection.

If a client doesn't want to send another request on the connection, it should send a Connection: close request header in the final request.

The connection can be kept persistent only if all messages on the connection have a correct, self-defined message length.

HTTP/1.1 proxies must manage persistent connections separately with clients and servers. Each persistent connection applies to a single transport hop.

HTTP/1.1 proxy servers should not establish persistent connections with an HTTP/1.0 client. But many vendors bend this rule.

HTTP/1.1 devices may close the connection at any time, though servers should try not to close in the middle of transmitting a message and should always respond to at least one request before closing.

HTTP/1.1 applications must be able to recover from asynchronous closes. Clients should retry the requests as long as they don't have side effects that could accumulate.

Clients must be prepared to retry requests if the connection closes before they receive the entire response, unless the request could have side effects if repeated.

A single-user client should maintain at most two persistent connections to any server or proxy.
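The self-defined length rule can be checked roughly as below. The sketch treats a message as self-delimiting when its last transfer coding is `chunked` or it carries a numeric Content-Length; it deliberately ignores messages that have no body at all (such as responses to HEAD), and `has_self_defined_length` is a hypothetical helper.

```python
def has_self_defined_length(headers):
    """True if the receiver can find the end of the body without a connection close."""
    lower = {k.lower(): v for k, v in headers.items()}
    te = lower.get("transfer-encoding", "")
    if te and te.split(",")[-1].strip().lower() == "chunked":
        return True                     # chunked coding marks its own end
    cl = lower.get("content-length", "").strip()
    return cl.isdigit()                 # an explicit, numeric Content-Length
```

A message for which this returns `False` can only be delimited by closing the connection, so the connection cannot stay persistent after it.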

6. Pipelined Connections

Multiple requests can be enqueued before the responses arrive. While the first request is streaming across the network to a server on the other side of the globe, the second and third requests can get underway.

Reducing network round trips:

Restrictions for pipelining:

HTTP clients should not pipeline until they are sure the connection is persistent.

HTTP responses must be returned in the same order as the requests (HTTP messages are not tagged with sequence numbers).

HTTP clients must be prepared for the connection to close at any time and be prepared to redo any pipelined requests that did not finish.

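HTTP clients should not pipeline requests that have side effects (such as POSTs), because unfinished pipelined requests may have to be redone (see the previous rule), and repeating them could duplicate those side effects.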
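The ordering and retry rules for pipelining can be modeled with a FIFO queue of outstanding requests. In this sketch, `Pipeline` is a hypothetical class, socket I/O is left out, and it assumes the idempotent methods GET, HEAD, PUT, DELETE, OPTIONS, and TRACE are the ones that are safe to pipeline and redo.

```python
from collections import deque

IDEMPOTENT = {"GET", "HEAD", "PUT", "DELETE", "OPTIONS", "TRACE"}

class Pipeline:
    """Tracks requests sent on one persistent connection before their responses arrive."""
    def __init__(self):
        self.outstanding = deque()

    def send(self, method, path):
        method = method.upper()
        if method not in IDEMPOTENT:
            raise ValueError(f"refusing to pipeline non-idempotent {method}")
        self.outstanding.append((method, path))   # request is now in flight

    def on_response(self, response):
        # Responses carry no sequence numbers, so the oldest outstanding
        # request is the one this response answers.
        return self.outstanding.popleft(), response

    def on_connection_closed(self):
        # Every unanswered request must be redone on a new connection.
        redo = list(self.outstanding)
        self.outstanding.clear()
        return redo
```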

7. The Mysteries of Connection Close

7.1 “At Will” Disconnection

Any HTTP client, server, or proxy can close a TCP transport connection at any time.

7.2 Content-Length and Truncation

Each HTTP response should have an accurate Content-Length header to describe the size of the response body.

When a client or proxy receives an HTTP response terminating in connection close, and the actual transferred entity length doesn't match the Content-Length, the receiver should question the correctness of the length.

If the receiver is a caching proxy, the receiver should not cache the response. The proxy should forward the questionable message intact, without attempting to "correct" the Content-Length, to maintain semantic transparency.
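The truncation rule for a caching proxy can be sketched as follows. `content_length_mismatch` and `proxy_relay` are hypothetical helpers, the cache is assumed to be a plain dict keyed by URL, and a response without a Content-Length is treated as having nothing to contradict.

```python
def content_length_mismatch(headers, body):
    """True if a declared Content-Length disagrees with the bytes actually received."""
    declared = None
    for name, value in headers.items():
        if name.lower() == "content-length":
            declared = value.strip()
    if declared is None:
        return False                    # nothing declared, nothing to contradict
    return not (declared.isdigit() and int(declared) == len(body))

def proxy_relay(url, headers, body, cache):
    """Forward a response that ended in connection close.

    The message is returned unmodified (the Content-Length is never "corrected");
    it is stored in the cache only when its length checks out.
    """
    if not content_length_mismatch(headers, body):
        cache[url] = (headers, body)
    return headers, body
```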

7.3 Connection Close Tolerance, Retries, and Idempotency

Connections can close at any time, even in non-error conditions.

If a transport connection closes while the client is performing a transaction, the client should reopen the connection and retry one time, unless the transaction has side effects.

A transaction is idempotent if it yields the same result regardless of whether it is executed once or many times.
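The retry-once rule can be sketched as a wrapper around a transaction. `perform` and the `send` callable are hypothetical; `send` is assumed to open a fresh connection on each call and to raise `ConnectionError` if the connection closes mid-transaction. The idempotent set uses the methods commonly listed as idempotent (GET, HEAD, PUT, DELETE, OPTIONS, TRACE).

```python
IDEMPOTENT_METHODS = {"GET", "HEAD", "PUT", "DELETE", "OPTIONS", "TRACE"}

def perform(method, send):
    """Run one transaction, retrying once after a connection close if it is idempotent."""
    try:
        return send()
    except ConnectionError:
        if method.upper() not in IDEMPOTENT_METHODS:
            raise                       # repeating could duplicate side effects
        return send()                   # reopen and retry exactly one time
```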

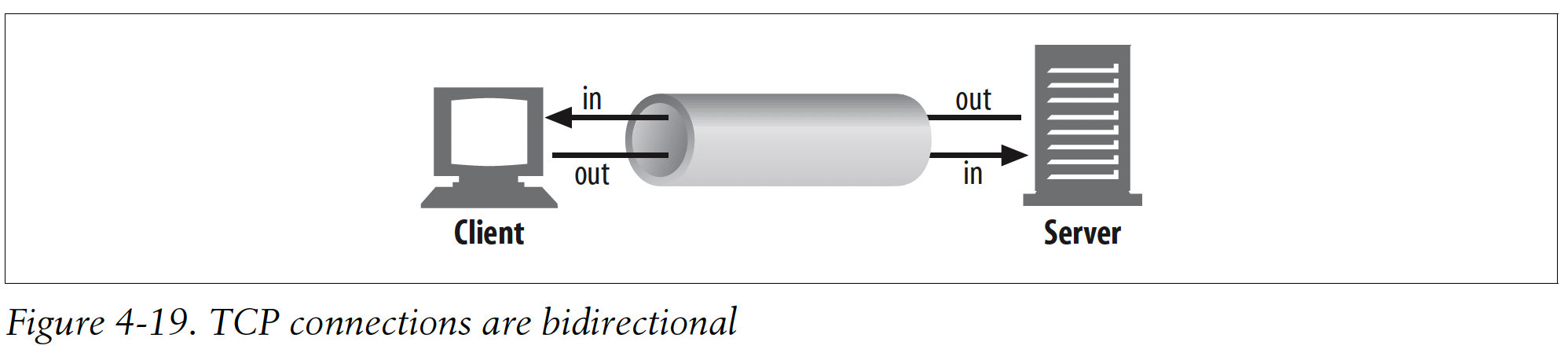

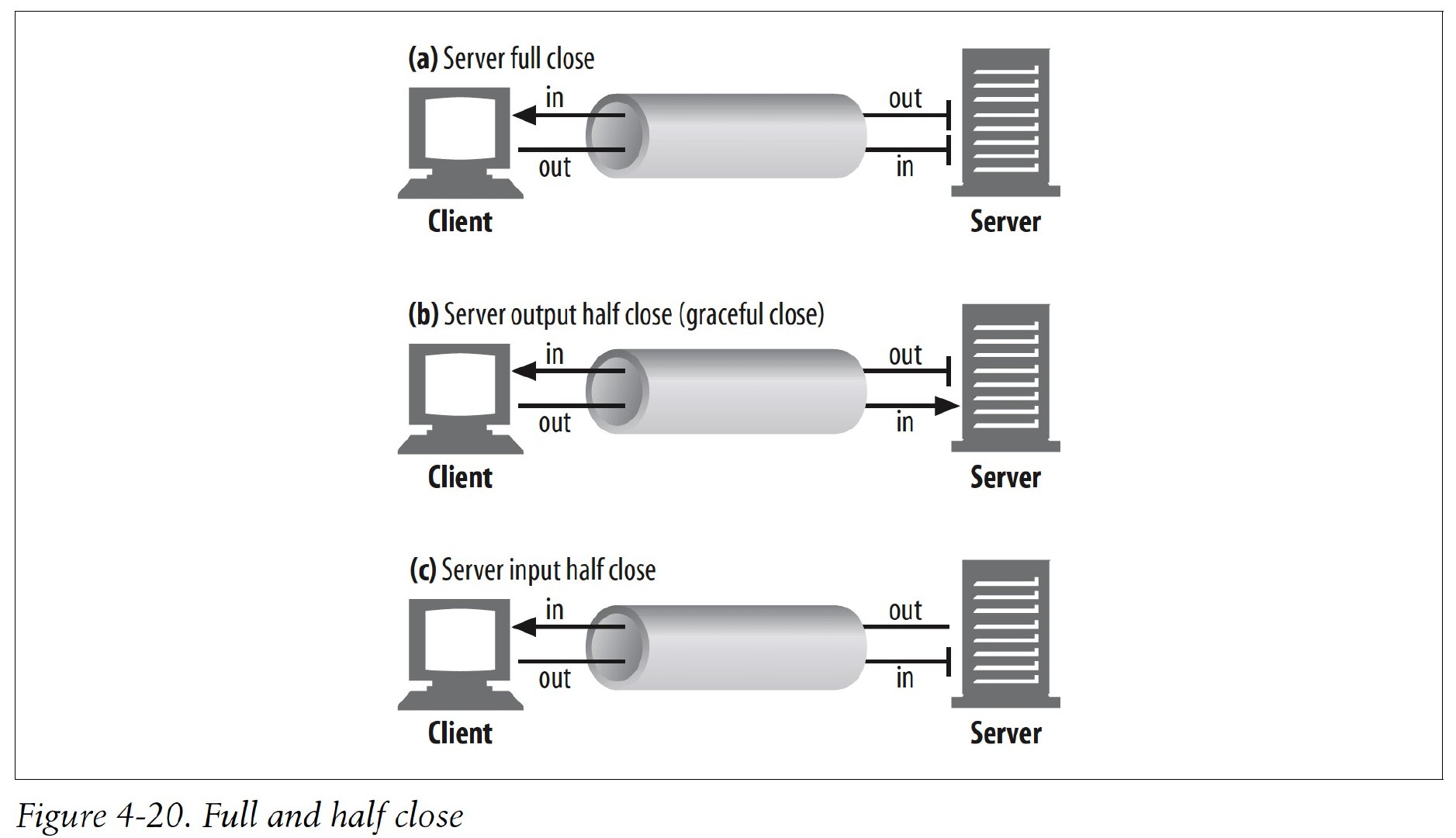

7.4 Graceful Connection Close

7.4.1 Full and half closes

A close() socket call closes both the input and output channels of a TCP connection. This is called a "full close" and is depicted in panel (a).

Use the shutdown() socket call to close either the input or output channel individually. This is called a "half close" and is depicted in panel (b).
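Python's `socket` module exposes both calls directly. This sketch uses `socket.socketpair()` as a local stand-in for a real client/server connection (an assumption made so it runs without a network): one end half closes its output with `shutdown(socket.SHUT_WR)`, the other end reads to end-of-file and can still send a reply, and `close()` then performs the full close. `read_all` and `half_close_demo` are hypothetical helpers.

```python
import socket

def read_all(sock):
    """Read until the peer closes its output channel (recv returns b"")."""
    data = b""
    while True:
        chunk = sock.recv(1024)
        if not chunk:
            return data
        data += chunk

def half_close_demo():
    client, server = socket.socketpair()      # two connected endpoints
    try:
        client.sendall(b"request")
        client.shutdown(socket.SHUT_WR)        # half close: client output is done
        request = read_all(server)             # server sees end-of-file
        server.sendall(b"response")            # ...but can still write back
        server.shutdown(socket.SHUT_WR)
        response = read_all(client)            # client input is still open
        return request, response
    finally:
        client.close()                         # full close of both channels
        server.close()
```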

7.4.2 TCP close and reset errors

Closing the output channel is always safe.

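Closing the input channel is riskier: if the peer later sends data to the closed input channel, the operating system may answer with a TCP "connection reset by peer" message, which can cause the peer to lose data it has not yet read.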

7.4.3 Graceful close

In general, applications implementing graceful closes will first close their output channels and then wait for the peer on the other side of the connection to close its output channel.

Applications wanting to close gracefully should half close their output channels and periodically check the status of their input channels. If the input channel isn't closed by the peer within some timeout period, the application may force connection close to save resources.
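The graceful-close procedure can be sketched as: half close the output, read from the input until the peer closes or a timeout expires, then close the socket. `graceful_close` is a hypothetical helper, and waiting on the input with a socket timeout is one assumed way to "periodically check" its status.

```python
import socket
import time

def graceful_close(sock, timeout=5.0):
    """Close sock gracefully; return True if the peer closed its output in time."""
    deadline = time.monotonic() + timeout
    peer_closed = False
    try:
        sock.shutdown(socket.SHUT_WR)          # 1. half close our output channel
        while True:
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                break                          # peer too slow: force the close
            sock.settimeout(remaining)
            if not sock.recv(4096):            # 2. b"" means the peer closed its output
                peer_closed = True
                break
            # any other data is read and discarded while we wait
    except OSError:
        pass                                   # timeout (an OSError subclass) or reset
    finally:
        sock.close()                           # 3. release the connection either way
    return peer_closed
```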
